AI Risk Management: Using Great Power Responsibly


January 19, 2026


Artificial intelligence (“AI”) is reshaping every corner of banking, from the front office to back-end operations. Its power lies in amplifying speed, precision and insight, enabling banks to reimagine how they operate and serve customers. But with this great power comes the need for thoughtful governance and internal controls.

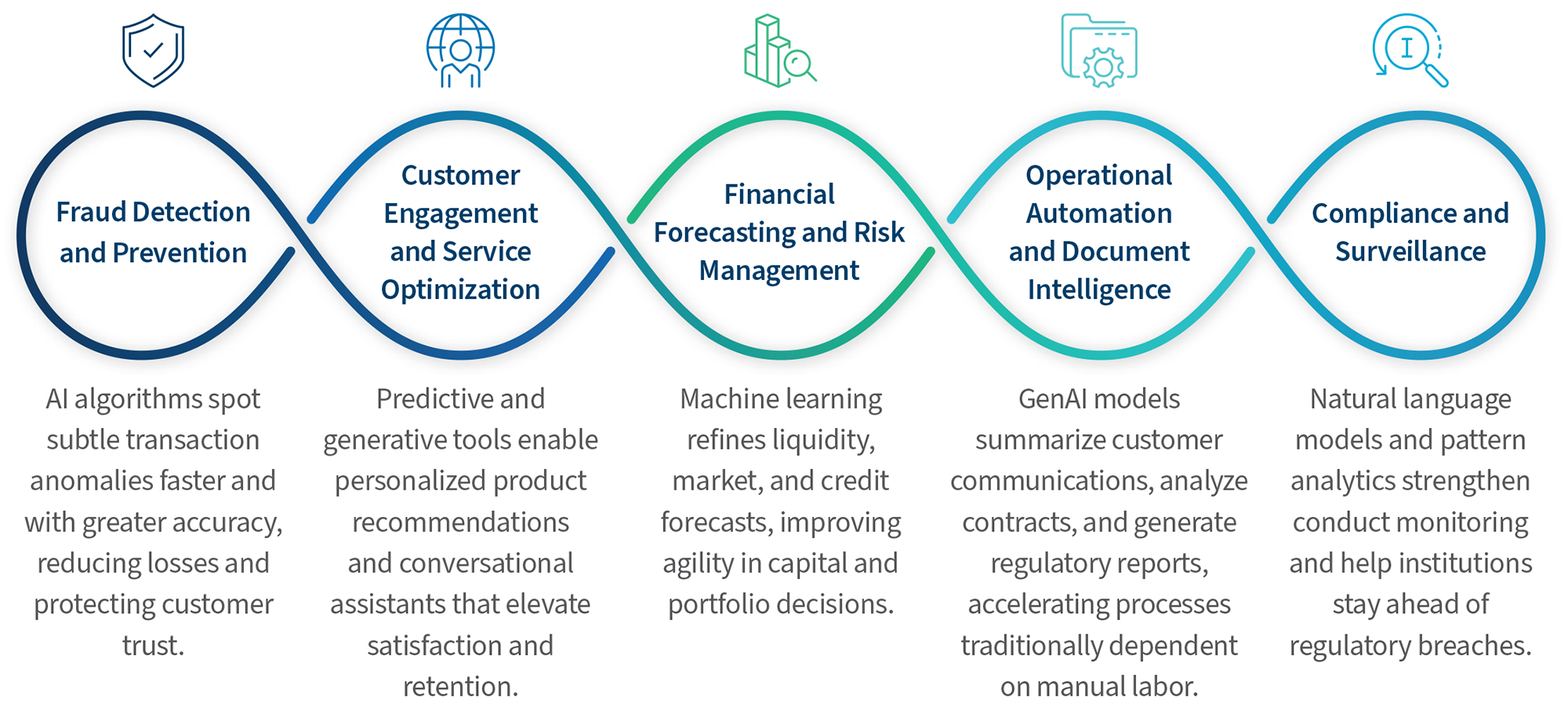

Across the banking industry, leading AI use cases demonstrate both promise and complexity:

Each of these use cases magnifies efficiency and insight, but also introduces key questions on accountability, transparency and trust. Without the right foundation and controls, the same algorithms that empower progress can create model governance gaps, data integrity failures, heightened cybersecurity threats and regulatory non-compliance. Left unchecked, these can strike at the heart of customer and regulator confidence, reminding leaders that sustainable progress requires responsible stewardship of AI’s immense potential, keeping humans in the loop.

Key Risk Areas To Watch

AI introduces a powerful but double-edged dynamic for financial institutions. The technology’s ability to automate, predict and generate insight at scale also magnifies exposure to data misuse, bias and operational disruption. In highly regulated industries like banking, the consequences of error are amplified. For example, failures in AI-enabled decisioning systems or AI models can trigger compliance violations, financial losses and reputational damage within hours. As these systems grow more autonomous and generative, the boundary between human oversight and algorithmic control becomes both thinner and more critical. In short, governance must evolve as quickly as the technology itself. To that end, keep the following categories of risk top of mind as you build or evolve your AI governance program:

- Data and model integrity risks – Incomplete, biased or poor-quality data erode model reliability. Flawed behavior at the model-architecture level can lead to hallucination, the fabrication of output that appears credible but is factually wrong.

- Compliance and fairness risks – AI’s opacity challenges explainability and can conceal bias or discriminatory effects, drawing regulatory scrutiny across consumer protection, lending and privacy domains.

- Operational and technology risks – Unmonitored automation can create cascading failures in workflows, while complex model dependencies heighten system fragility.

- Cybersecurity and third-party risks – A broad landscape that encompasses attacks such as training data poisoning, retrieval-augmented generation (“RAG”) vector store leakage, output manipulation via repeated queries, and model exfiltration through side-channel attacks. Additionally, model interfaces, shared application programming interfaces and open source code expand the attack surface, exposing banks to prompt injection, data leakage and intellectual property theft.

- Ethical and trust risks – Overreliance on AI judgment, weak accountability or manipulative use of GenAI outputs can damage customer and employee trust, an intangible but vital asset for every bank.

Each risk area underscores the same truth: the transformative power of AI must be balanced by uncompromising governance and sustained human oversight.

Making the Guardrails Visible

To capture AI’s potential power responsibly, banks must anchor innovation in strong foundational enablers. These “AI enablers” – e.g., data integrity, governance, human oversight and technological resilience – form the bridge between intention and accountability. These enablers ensure that power is balanced with prudence, a theme that must define the future of responsible AI adoption in banking. Prior to deploying AI solutions, banks should consider the following:

- Data governance and quality: High-caliber data reduces systemic bias and supports transparency.

- Model risk management: Adaptive frameworks that evolve with continuously learning models and GenAI outputs, including inventorying, tiering and validating models per supervisory expectations.

- Infrastructure modernization: Secure, scalable environments for model orchestration and monitoring.

- Ethical and organizational alignment: Clear accountability for every stage of AI-led decision-making.

- Regulatory engagement: Integration of model explainability and documentation standards to meet supervisory expectations.

- People and skills: Build teams with the right mix of innovative thinking and risk data science expertise, supported by DevOps, cybersecurity leaders and analysts who can review outputs, make high-level decisions and handle exceptions.
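As an illustration of the inventorying and tiering step under model risk management above, here is a minimal sketch. The field names, the three-flag scoring rule and the sample models are all hypothetical, chosen only to show the shape of such a record; an actual tiering policy would come from the bank's own model risk framework and supervisory expectations.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Tier(Enum):
    HIGH = "high"
    MEDIUM = "medium"
    LOW = "low"


@dataclass(frozen=True)
class ModelRecord:
    """One entry in a (hypothetical) AI model inventory."""
    name: str
    owner: str                  # accountable team or function
    customer_facing: bool       # does output reach customers directly?
    automated_decision: bool    # does it act without human review?
    generative: bool            # is it a GenAI model?
    last_validated: Optional[str] = None  # ISO date; None if never validated


def assign_tier(model: ModelRecord) -> Tier:
    # Illustrative rule only: count how many risk flags are set.
    flags = sum([model.customer_facing, model.automated_decision, model.generative])
    if flags >= 2:
        return Tier.HIGH
    if flags == 1:
        return Tier.MEDIUM
    return Tier.LOW


def validation_backlog(inventory: List[ModelRecord]) -> List[str]:
    # High-tier models that have never been independently validated.
    return [
        m.name
        for m in inventory
        if assign_tier(m) is Tier.HIGH and m.last_validated is None
    ]


# Hypothetical inventory entries for demonstration.
inventory = [
    ModelRecord("credit-decisioning", "retail-risk", True, True, False),
    ModelRecord("support-chat-assistant", "digital", True, False, True, "2025-11-03"),
    ModelRecord("branch-staffing-forecast", "operations", False, False, False),
]

backlog = validation_backlog(inventory)
```

Even a simple structure like this makes ownership explicit for each model and gives a governance council a concrete list of where validation effort should go first.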

Best Practices for Responsible AI Use in Banking

Leading banks are developing integrated AI control frameworks that bring governance, compliance and data management together under a unified model. They are aligning governance across risk functions to establish clear ownership and accountability, while enforcing explainability (global and local) standards that make AI decisions auditable and transparent. Robust model development standards and validation, continuous monitoring, tuning and calibration are all part of a control lifecycle that supports ongoing AI adoption, in partnership with strict ethical standards for selecting use cases for the implementation of AI.

Rigorous bias and model assurance testing is conducted before high-impact deployments, complemented by secure GenAI environments designed to prevent prompt manipulation and data leakage. Continuous training reinforces responsible use and underscores the importance of human judgment in every stage of the AI lifecycle. Finally, constant, dynamic monitoring ensures early detection of model drift, output inconsistencies or unintended consequences. When executed consistently, these practices transform risk management from a constraint into a catalyst for innovation.
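One common way to put the drift monitoring described above into practice is the population stability index (PSI), which compares the distribution of a model input or score at validation time with what the model sees in production. The sketch below is one possible implementation, not a method prescribed by this article: the function name, the equal-width binning and the floor value `eps` are assumptions, and the 0.10 / 0.25 alert thresholds mentioned in the comments are an industry rule of thumb rather than a regulatory standard.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample and a production sample.

    Bins are equal-width over the baseline range; production values
    outside that range are counted in the first or last bin.
    """
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline sample has no spread; cannot bin it")
    width = (hi - lo) / bins

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clip out-of-range values
            counts[idx] += 1
        n = len(values)
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / n, eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic data for demonstration only.
baseline = [i / 100 for i in range(100)]      # scores seen at validation
production = [v + 0.3 for v in baseline]      # scores shifted upward

stable_psi = population_stability_index(baseline, baseline)
drifted_psi = population_stability_index(baseline, production)

# Rule of thumb (an assumption, not a standard): below 0.10 is stable,
# 0.10 to 0.25 warrants review, above 0.25 suggests significant drift.
needs_review = drifted_psi > 0.25
```

A check like this can run on a schedule for each monitored feature or score, with breaches routed to a human reviewer rather than triggering automatic retraining, which keeps a person in the loop.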

Now Is the Time To Act

Now is the time for banks to translate intention into action. Those who haven’t are already behind, and those who don’t will not catch up. Leadership teams should start by taking inventory of current AI initiatives and assessing where governance, data quality and oversight controls are strongest, and where gaps remain. Establishing cross-functional AI governance councils, aligning policies across risk, compliance and technology, and piloting responsible-use frameworks in priority use cases can produce progress quickly. Firms also must invest in education and transparency, ensuring that both employees and customers understand how AI decisions are made and supervised.

For an example of where to start, banks can look at the compliance plan the U.S. Securities and Exchange Commission published in response to the mandate to accelerate the federal government’s use of AI, set forth in Memorandum M-25-21 from the U.S. Office of Management and Budget.1 While high-level and not banking-specific, it shows how a regulatory agency is setting a course for the use and governance of AI.

AI gives banks extraordinary power to detect fraud, serve customers better, operate at new levels of efficiency and innovate across product offerings. The institutions that succeed will be those that pair ambition with accountability, embedding risk management at the heart of AI operations. The future of banking innovation depends not just on what AI can do, but how responsibly we choose to use it.

Footnote:

1: Szczepanik, Valerie, “OMB Memorandum M-25-21 Compliance Plan,” U.S. Securities and Exchange Commission (Sept. 2025).
